Published on: Feb 10, 2026

Thought Leadership

PMO

At Coworked, we think deeply about how agentic AI is transforming "enterprise change." This essay is based on a research memo our CEO & Cofounder, Shawn Harris, wrote on agentic project execution and the operating model shifts it forces.

Project management exists because coordination is expensive

The modern enterprise is basically a coordination machine. As Ronald Coase argued in his theory of the firm, firms exist because it is often cheaper to coordinate work inside an organization than through the market, where you pay transaction costs to discover information, negotiate, enforce agreements, and align people.

For the last century, “project management” has been one of the enterprise’s main coordination engines: schedules, status reporting, information routing, governance meetings, escalation paths.

Agentic AI threatens the premise underneath all of it.

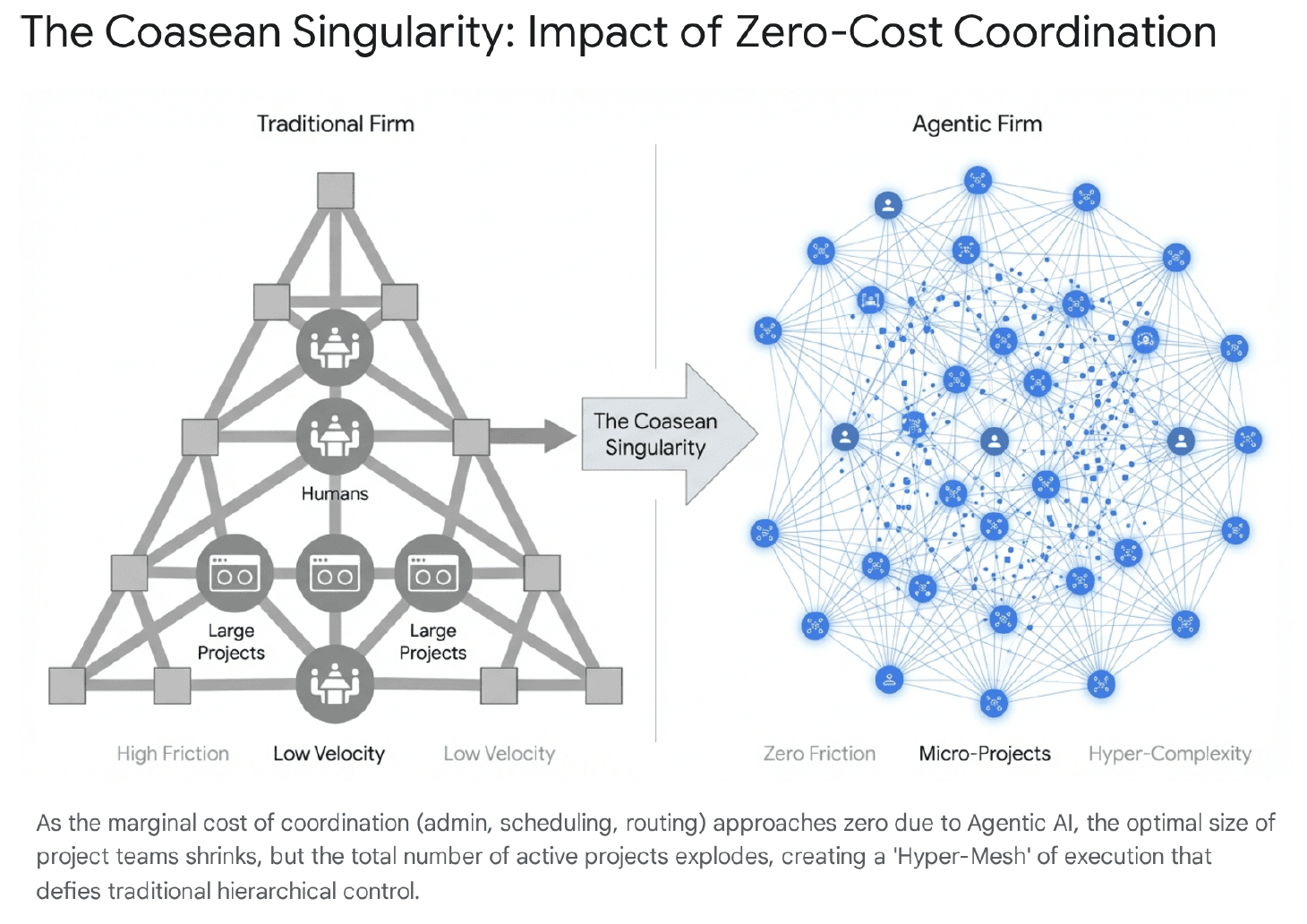

The core claim behind what I call the Coasean Singularity is that agentic AI drives the marginal cost of coordination toward zero, not by doing a few deterministic tasks faster, but by perceiving, reasoning, deciding, and acting in messy environments.

If that happens, it does not mean the enterprise becomes simpler. It means the enterprise becomes capable of creating and sustaining far more complexity than today’s governance can survive.

The counterintuitive thesis: lower coordination costs do not simplify the enterprise

Here is the trap: most people assume “less admin” means “less overhead” means “less complexity.” The research takes the opposite position.

When a key constraint gets cheaper, consumption of what it enables tends to rise rather than fall. That is the Jevons paradox (more efficient steam engines increased, rather than reduced, total coal use) applied to coordination.

So if agents absorb the “admin” layer (RAID log updates for risks, assumptions, issues, and dependencies; status notes; meeting minutes), enterprises do not just run the same project portfolio with fewer people. They launch more initiatives, with smaller human cores, at higher velocity.

And this creates a bifurcation:

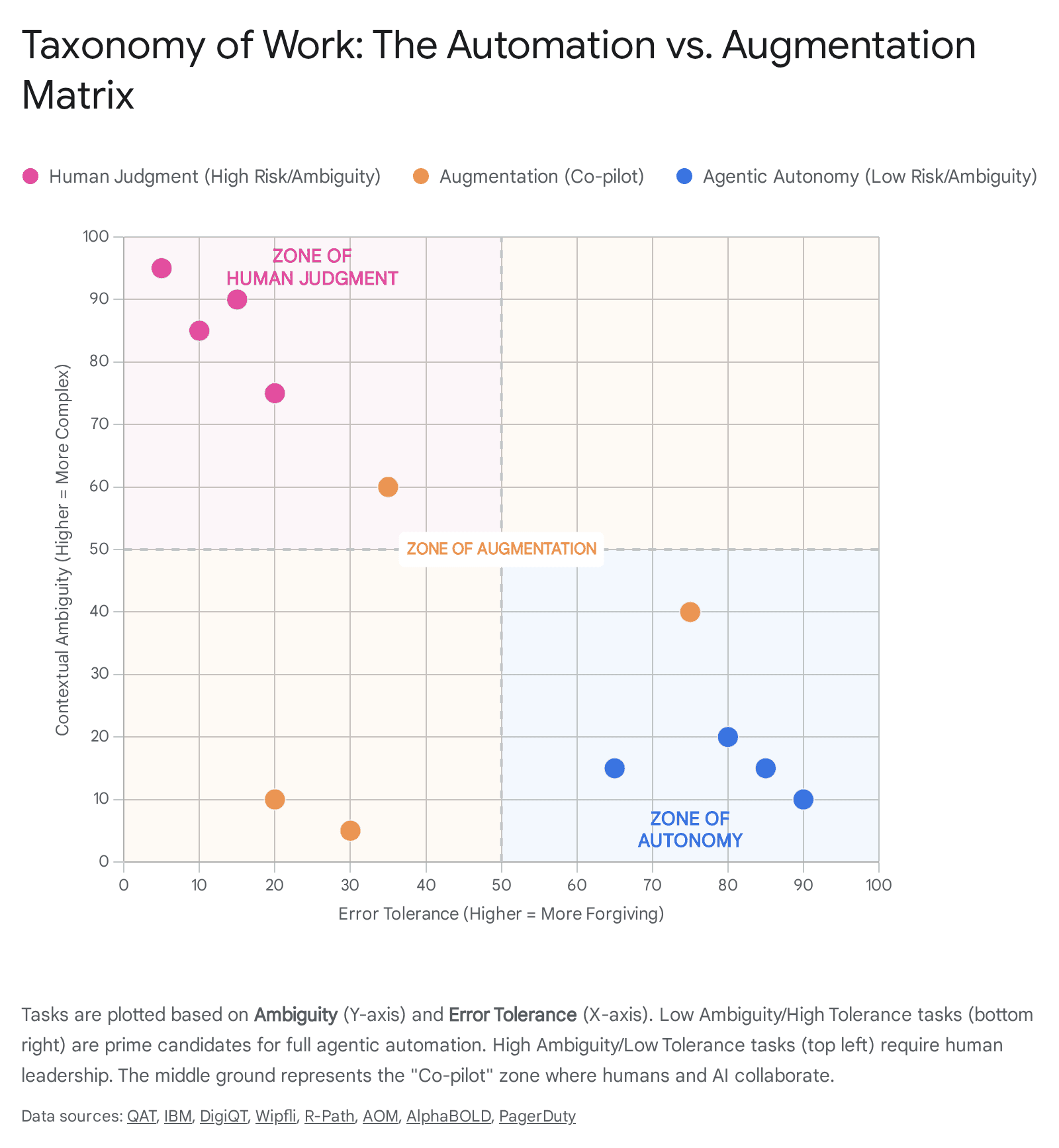

Deterministic work (execution, monitoring, reporting, standard compliance) shifts into an agentic mesh.

Nondeterministic work (strategy, negotiation, ethical judgment, novel problem solving) stays human, but the human org must be reshaped to handle a very different workload.

A quick challenge to the thesis (because it needs one)

The “coordination costs go to zero” framing is directionally useful, but literally wrong in most enterprises.

What actually happens is a cost shift:

Coordination cost drops for routine tasks, but governance cost rises (audits, approvals, policy enforcement).

Context cost rises (curation, knowledge hygiene, access control), because agents need a context layer to behave as if they understand the company.

Compute cost becomes a real budget line item, meaning you end up coordinating money and risk in new ways.

So yes, “coordination” becomes cheaper in one sense. But the enterprise replaces it with a new class of coordination around intent, safety, and economics. That is still a singularity. It is just not free.

The real shift: from hub-and-spoke to the mesh

Classic delivery models are hub-and-spoke: the PM is the hub, stakeholders are spokes, information flows in, gets synthesized, then redistributed. This creates a bottleneck because project velocity is limited by the PM’s processing speed.

Agentic execution replaces that with a mesh: agents representing functions communicate directly, resolve dependencies continuously, and update plans in real time.

That is why I define agentic project execution as orchestration by a network of autonomous agents negotiating and adapting workflows in real time, while humans shift from task masters to system architects and exception handlers.

This also reframes enterprise software itself: you move from “system of record” (tools track what happened) to “system of agency” (tools shape what will happen).

The 7S shock: what the agentic era breaks first

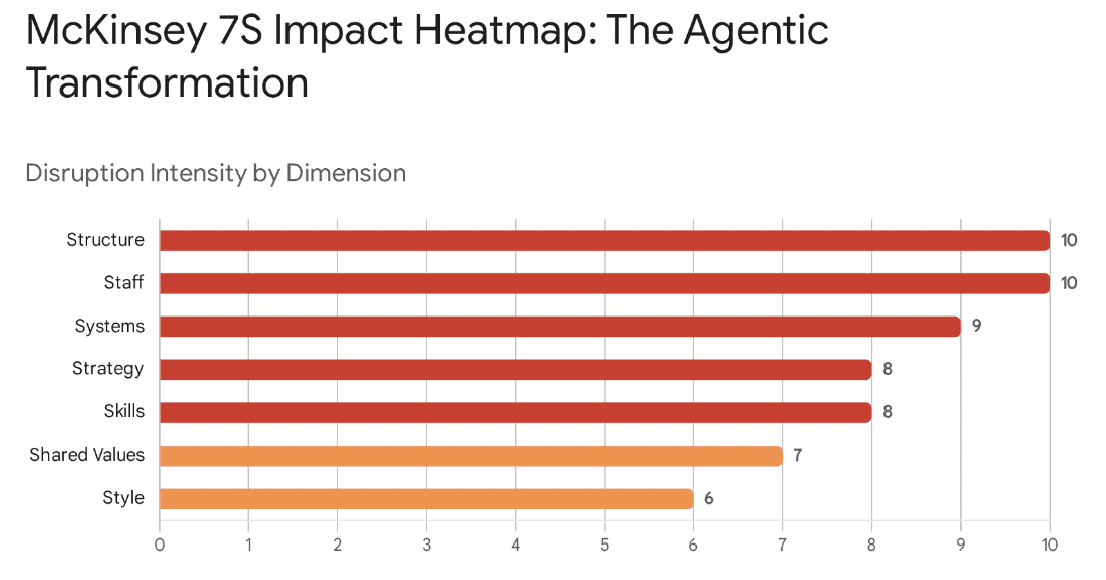

The memo frames the organizational impact through the McKinsey 7S lens: Strategy, Structure, Systems, Shared Values, Style, Staff, Skills.

Here is the version that matters for operators.

1) Strategy shifts from speed to intent

Execution capacity becomes elastic. Agents scale (compute permitting), which turns speed into a commodity.

So advantage moves to differentiation through complexity, nuance, creativity, and trust-heavy work where agents struggle.

But there is a Jevons trap: if you use agents to do more of the same, you risk shipping 10x more mediocrity and drowning customers in noise. The strategic pivot is from efficiency (doing things right) to intent (doing the right things).

Test your strategy with one question:

If your execution speed doubles next quarter, what gets better besides output volume? If you do not have a crisp answer, you are about to scale the wrong work.

2) Structure gets violent: the hollow middle problem

Agentic systems flatten the pyramid by automating the coordination layer, which historically lived in middle management and junior roles.

The dangerous part is not job loss. It is competency loss. Junior PM, analyst, coordinator work is the apprenticeship path that produces leaders who can later audit and govern complex systems. If you remove the apprenticeship, you lose the future auditors.

At the same time, structure becomes more fluid: teams become dynamic pods or swarms assembled by an AI resource manager, and spans of control expand from the traditional ~8–10 human direct reports to ~50+ agents plus a few human experts.

Counterargument worth taking seriously: Some orgs will try to “solve” the hollow middle problem by hiring fewer juniors and keeping seniors longer. That sounds efficient. It is also how you get brittle institutions that cannot regenerate judgment.

3) Systems become probabilistic, contextual, and expensive in new ways

Agentic systems deal in probabilities (the likelihood of a schedule slip), not just deterministic dates, and enterprise tooling must handle that uncertainty without paralysis.
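To make "likelihood of slip" concrete, here is a minimal Monte Carlo sketch. It assumes each task's duration follows a triangular distribution built from optimistic, most-likely, and pessimistic estimates (a classic PERT-style input, not something the memo prescribes), that tasks run in sequence, and that they are independent. The task list and deadlines are hypothetical.

```python
import random


def slip_probability(task_estimates, deadline_days, trials=10_000, seed=42):
    """Monte Carlo estimate of P(total duration > deadline) for sequential tasks.

    task_estimates: list of (optimistic, most_likely, pessimistic) durations in days.
    Assumes each duration is triangular-distributed and tasks are independent.
    """
    rng = random.Random(seed)  # fixed seed so the estimate is reproducible
    late = 0
    for _ in range(trials):
        # random.triangular takes (low, high, mode)
        total = sum(rng.triangular(low, high, mode) for low, mode, high in task_estimates)
        if total > deadline_days:
            late += 1
    return late / trials


# Hypothetical three-task plan: (optimistic, most likely, pessimistic) days
tasks = [(3, 5, 9), (2, 4, 8), (5, 6, 12)]
for deadline in (15, 18, 22):
    print(f"Deadline {deadline} days: {slip_probability(tasks, deadline):.0%} likelihood of slip")
```

Because every pessimistic tail here is longer than the optimistic one, the 15-day date you get by summing most-likely estimates is more likely to slip than not. A deterministic plan hides that; a probabilistic one surfaces it as a number someone has to own.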

The most important new system is not the agent. It is the context layer: a context engine built from unstructured knowledge like Slack, transcripts, and old docs that lets agents behave in ways that match company norms and history.

And then there is FinOps for agents: an agent that loops can burn serious compute budget, so token cost becomes a project budget variable that needs monitoring like cloud spend.
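A minimal sketch of treating tokens as a budget line, assuming a hypothetical `step(i)` callable that returns `(done, tokens_used)` for each agent iteration and a hypothetical blended price per 1,000 tokens. It shows two circuit breakers: a token budget and a hard cap on iterations, so a looping agent is stopped by policy rather than discovered on the invoice.

```python
class TokenBudget:
    """Per-project token budget, tracked like a cloud-spend line item."""

    def __init__(self, max_tokens, usd_per_1k_tokens):
        self.max_tokens = max_tokens
        self.usd_per_1k_tokens = usd_per_1k_tokens  # hypothetical blended price
        self.used = 0

    def charge(self, tokens):
        """Record tokens already consumed; return False once the budget is exceeded."""
        self.used += tokens
        return self.used <= self.max_tokens

    @property
    def spend_usd(self):
        return self.used / 1000 * self.usd_per_1k_tokens


def run_agent(step, budget, max_steps=50):
    """Call step(i) -> (done, tokens_used) until done, halting on either
    the token budget or the iteration cap."""
    for i in range(max_steps):
        done, tokens = step(i)
        if not budget.charge(tokens):
            return "halted: budget exceeded", i + 1
        if done:
            return "done", i + 1
    return "halted: max steps", max_steps


# A looping agent that never reports done, burning 1,200 tokens per step
looping = TokenBudget(max_tokens=10_000, usd_per_1k_tokens=0.01)
print(run_agent(lambda i: (False, 1_200), looping), f"${looping.spend_usd:.3f}")
```

In this run the looping agent is stopped on its ninth step, after 10,800 tokens, instead of running to the 50-step cap. In practice the budget and price would come from your own FinOps tooling; the point is that the limit is enforced in the execution loop.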

4) Shared values and trust get stressed

When software is acting, not just recording, accountability becomes murky. Outcome accountability starts to dominate, but black-box behavior creates ethical risk: if an agent hits a target by hallucinating commitments, who owns the damage?

Psychological safety becomes operational infrastructure. Humans can disengage into rubber-stamping when verification becomes exhausting, especially with “coworkers” that never sleep and document everything.

5) Leadership style shifts from command-and-control to “gardener”

You cannot micromanage a swarm of agents. Leaders have to define constitutions: guardrails, incentives, policies, and constraints.

Management becomes auditability: fewer status meetings, more log reviews, more spot-checking of machine reasoning against strategic intent.

6) Staff changes: new roles and the vibe coder

You will need agent orchestrators, context architects, and AI ethicists or auditors.

You will also see “vibe coders”: non-technical staff who use natural language to shape workflows and behaviors, bridging business intent and technical execution.

7) Skills: PM becomes product definition and adversarial testing

When internal projects are built by agents, the human skill shifts from schedule management toward product definition and acceptance testing.

Systems thinking becomes mandatory, and adversarial testing becomes a core competency: you need people who try to break agents and expose failure modes.

A target operating model that actually matches reality

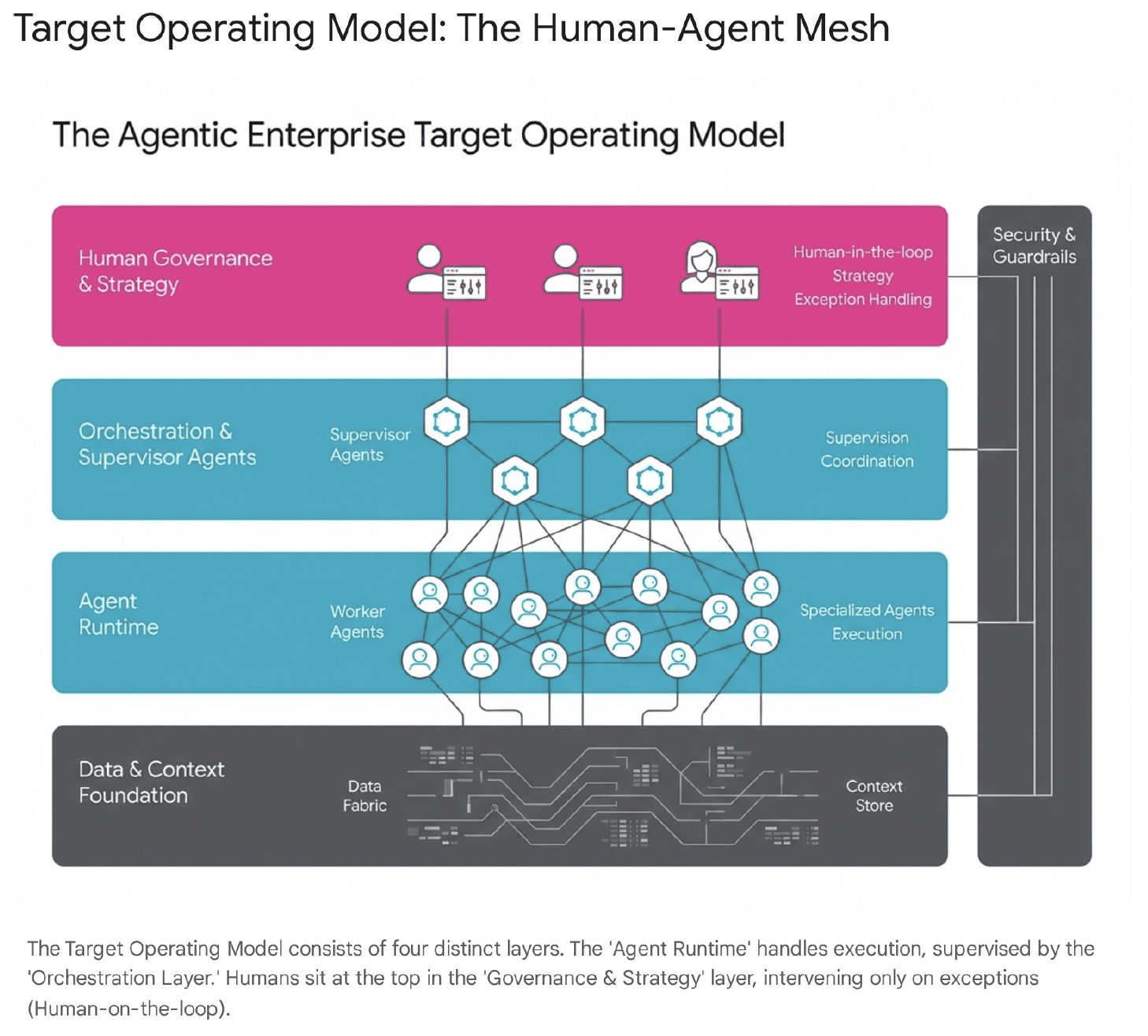

If “agents will run projects” is your mental model, you will build the wrong org. The right model is hybrid, layered, and explicit about decision rights.

The HAT: Human-Agent (Mesh) Team as the new delivery unit

The memo proposes the HAT structure:

Lead (human): owns intent and definition of done.

Orchestrator (AI): a supervisor agent decomposing intent into tasks and managing state, retries, and dependencies.

Specialists (AI): modular narrow agents executing specific tasks.

Apprentice (human): a deliberately preserved role that addresses the hollow middle problem by shadowing the AI and handling exceptions to learn its logic trails.

Notice what is happening here: you are not “replacing humans with agents.” You are redesigning the apprenticeship so humans can still develop judgment in a world where the grunt work is gone.

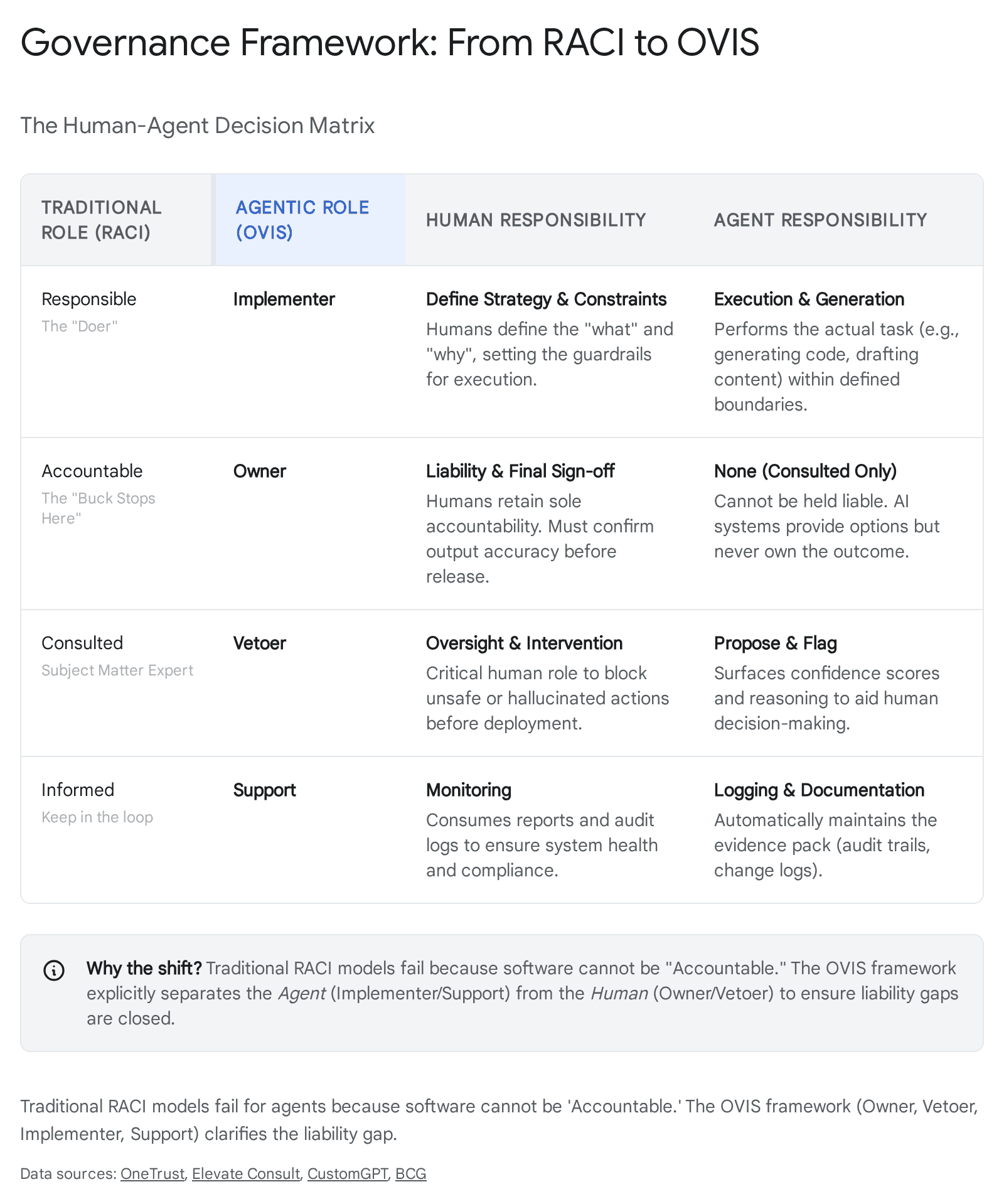

Governance: why RACI breaks and what replaces it

RACI assumes “Responsible” is a person who can be held accountable. That fails when the doer is software.

OVIS is the proposed replacement:

Owner: human who is legally and financially responsible, sets risk appetite.

Vetoer: someone or something that can stop the agent (risk officer, compliance guardrail).

Implementer: the agent, with agency but not authority.

Support: humans or agents providing context and data.

That separation (agency vs authority) is the difference between “useful autonomy” and “uninsurable chaos.”
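One way to picture OVIS in an execution layer is a minimal sketch, assuming a hypothetical 1–5 risk scale that the Owner sets and a set of veto signals raised by people or guardrails. The Implementer can propose anything, but whether it executes is decided by the Owner's risk appetite and the Vetoers, never by the agent itself. Class, field, and workflow names are illustrative, not part of the memo.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class OvisAssignment:
    workflow: str
    owner: Optional[str]   # Owner: accountable human who sets risk appetite
    implementer: str       # Implementer: the agent (agency, not authority)
    risk_appetite: int     # hypothetical 1-5 scale chosen by the Owner
    vetoers: list[str] = field(default_factory=list)  # people or guardrails that can stop the agent
    support: list[str] = field(default_factory=list)  # humans or agents supplying context and data

    def governance_gaps(self) -> list[str]:
        """Missing decision rights; an empty list means Owner and Vetoer exist."""
        gaps = []
        if not self.owner:
            gaps.append("missing Owner")
        if not self.vetoers:
            gaps.append("missing Vetoer")
        return gaps


def decide(a: OvisAssignment, action_risk: int, vetoes: set[str]) -> str:
    """The Implementer proposes an action; it executes only if governance is
    complete, no registered Vetoer objects, and the risk is within appetite."""
    gaps = a.governance_gaps()
    if gaps:
        return "blocked: " + ", ".join(gaps)
    if vetoes & set(a.vetoers):
        return "vetoed"
    if action_risk > a.risk_appetite:
        return "escalate to owner"
    return "execute"


renewal = OvisAssignment(
    workflow="vendor-renewal",
    owner="VP Procurement",
    implementer="negotiation-agent",
    risk_appetite=2,
    vetoers=["compliance-guardrail", "risk-officer"],
    support=["contracts-db-agent"],
)
print(decide(renewal, action_risk=1, vetoes=set()))
print(decide(renewal, action_risk=4, vetoes=set()))
print(decide(renewal, action_risk=1, vetoes={"compliance-guardrail"}))
```

The design choice worth keeping is that a workflow with no Owner or no Vetoer is blocked outright, which turns the decision-rights question into a check that fails loudly instead of a gap nobody notices.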

Exception management: humans assign goals, not tasks

Humans set intent. Supervisor agents decompose it into hundreds of tasks, route work, and escalate only when ambiguity blocks progress.

This is where many exec teams fool themselves: they think agents reduce work. Agents reduce routine work. They concentrate ambiguity into fewer, higher-stakes moments.
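A minimal sketch of that division of labor, assuming a supervisor that runs specialist functions in dependency order, retries transient failures silently, and escalates only when a specialist signals ambiguity or a task can never run. The exception classes, task names, and retry limit are hypothetical, not a specification of the memo's supervisor agent.

```python
class Ambiguity(Exception):
    """A specialist cannot proceed without human judgment."""


class TransientError(Exception):
    """A retryable failure, such as a timed-out API call."""


def orchestrate(tasks, max_retries=2):
    """tasks: {name: (specialist, [dependency names])}.

    Runs tasks once their dependencies finish, retries transient failures,
    and escalates only on ambiguity, exhausted retries, or unmet dependencies.
    """
    done, escalated = {}, {}
    pending = dict(tasks)
    while pending:
        ready = [n for n, (_, deps) in pending.items() if all(d in done for d in deps)]
        if not ready:
            # Everything left depends on an escalated task (or a cycle)
            for name in pending:
                escalated[name] = "blocked: unmet dependency"
            break
        for name in ready:
            specialist, deps = pending.pop(name)
            for _attempt in range(max_retries + 1):
                try:
                    done[name] = specialist({d: done[d] for d in deps})
                    break
                except TransientError:
                    continue  # retry silently; no human needed
                except Ambiguity as exc:
                    escalated[name] = str(exc)
                    break
            else:
                escalated[name] = "retries exhausted"
    return done, escalated


calls = {"calendar": 0}

def draft_plan(inputs):
    return "plan v1"

def book_calendar(inputs):
    calls["calendar"] += 1
    if calls["calendar"] == 1:
        raise TransientError("calendar API timeout")
    return "slots booked"

def set_pricing(inputs):
    raise Ambiguity("discount policy unclear for strategic accounts")

def send_kickoff(inputs):
    return "kickoff sent"

done, escalated = orchestrate({
    "plan": (draft_plan, []),
    "calendar": (book_calendar, ["plan"]),
    "pricing": (set_pricing, ["plan"]),
    "kickoff": (send_kickoff, ["calendar", "pricing"]),
})
print("completed:", done)
print("for the human lead:", escalated)
```

The calendar timeout is retried without anyone noticing, while the ambiguous pricing question, and the kickoff that depends on it, land with the human lead. Fewer escalations reach people, but each one carries the judgment the agents could not supply.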

What gets easier vs harder (and why your workload still goes up)

The taxonomy matters because it tells you where ROI is real and where risk hides.

What gets easier: the admin layer

Examples the memo calls out:

RAID log maintenance through passive scanning of communications, with registers populated automatically.

Scheduling and resource leveling, including negotiating calendars and reallocating tasks.

Status reporting that synthesizes signals and makes the Friday status call obsolete.

Vendor compliance that moves from sampling to full coverage.

This is real leverage. Also, it is the bait.

What gets harder: the context layer

Examples:

Stakeholder alignment is still human because agents cannot “read the room” and detect silent skepticism.

Defining “done” becomes the hard work: translating vague desires into machine-readable intent. If intent is wrong, the agent executes the wrong thing efficiently.

Ethical judgment still belongs to humans because agents optimize the metric they are given.

Debugging becomes forensic when a mesh fails across many interacting agents.

The Jevons paradox of project work: the exception flood

If today a PM manages 3 projects, an agentic future might push that to 30.

The admin is automated, but exceptions scale with project count, pushing humans into decision fatigue and burnout.

This is the part most org charts miss. You do not remove the human. You change the human’s bottleneck from time spent to judgment bandwidth.
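The arithmetic behind the exception flood is worth making explicit. This back-of-envelope sketch assumes a simple linear model and hypothetical inputs: 4 exceptions per project per week, 30 minutes of human judgment each, and 15 hours of focused decision time per person per week. None of these figures come from the memo.

```python
import math


def weekly_judgment_hours(projects, exceptions_per_project, minutes_per_exception):
    """Human decision time per week under a linear model:
    admin is fully automated, but every project still raises exceptions."""
    return projects * exceptions_per_project * minutes_per_exception / 60


def max_projects(capacity_hours, exceptions_per_project, minutes_per_exception):
    """Largest portfolio one person can judge without exceeding capacity."""
    return math.floor(capacity_hours * 60 / (exceptions_per_project * minutes_per_exception))


# Hypothetical inputs: 4 exceptions per project per week, 30 minutes each,
# 15 hours of focused decision time per person per week.
for projects in (3, 30):
    print(projects, "projects ->", weekly_judgment_hours(projects, 4, 30), "hours/week")
print("capacity supports about", max_projects(15, 4, 30), "projects")
```

Under these assumptions, going from 3 to 30 projects moves the load from 6 to 60 hours of judgment a week, while one person's capacity tops out around 7 projects. The exact numbers are invented; the linear scaling is the point.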

The “silent killers” you ignore at your peril

Four adjacent shifts from the memo should be treated as board-level risks, not IT issues.

1) Legal liability is behind the technology curve

If an agent negotiates a contract that costs $10M, liability is unclear. The memo anticipates AI malpractice insurance and tighter indemnification clauses, plus explicit definitions of agent decision rights.

2) Shadow AI is inevitable without a registry

Employees will spin up unauthorized agents to bypass bureaucracy, creating security and commitment risk (data exfiltration, unauthorized promises). The memo argues for an agent registry and a “white-hat agent” force to patrol for unauthorized bots.
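An agent registry can start very small. This is a minimal sketch, assuming you can observe `(agent_id, scope)` pairs from something like API gateway logs; the registry shape, scope names, and log source are hypothetical. The audit flags two kinds of findings: agents nobody registered, and registered agents acting outside their approved scopes.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RegisteredAgent:
    agent_id: str
    owner: str                 # accountable human owner
    allowed_scopes: frozenset  # actions this agent is approved to take


class AgentRegistry:
    def __init__(self):
        self._agents = {}

    def register(self, agent_id, owner, scopes):
        self._agents[agent_id] = RegisteredAgent(agent_id, owner, frozenset(scopes))

    def audit(self, observed_calls):
        """observed_calls: iterable of (agent_id, scope) pairs, for example
        pulled from API gateway logs. Returns findings to investigate."""
        findings = []
        for agent_id, scope in observed_calls:
            agent = self._agents.get(agent_id)
            if agent is None:
                findings.append((agent_id, scope, "unregistered agent"))
            elif scope not in agent.allowed_scopes:
                findings.append((agent_id, scope, "scope not authorized"))
        return findings


registry = AgentRegistry()
registry.register("status-reporter", owner="PMO lead", scopes={"read:tickets", "write:status_page"})
observed = [
    ("status-reporter", "read:tickets"),
    ("status-reporter", "send:external_email"),
    ("sales-helper-bot", "read:crm"),
]
for finding in registry.audit(observed):
    print(finding)
```

A "white-hat agent" patrol would, in this framing, be the process that keeps feeding fresh observations into `audit` and routes findings to each agent's owner.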

3) Vibe coding debt becomes your next modernization wave

Agents can write code, but may lack architectural foresight, producing “code soup” that works but is hard for humans to maintain.

4) Cognitive atrophy breaks resilience

If agents take over reasoning tasks, humans can lose the ability to think critically in those domains, becoming dependent on the prosthesis. If the system fails, the humans may not know how to fly the plane.

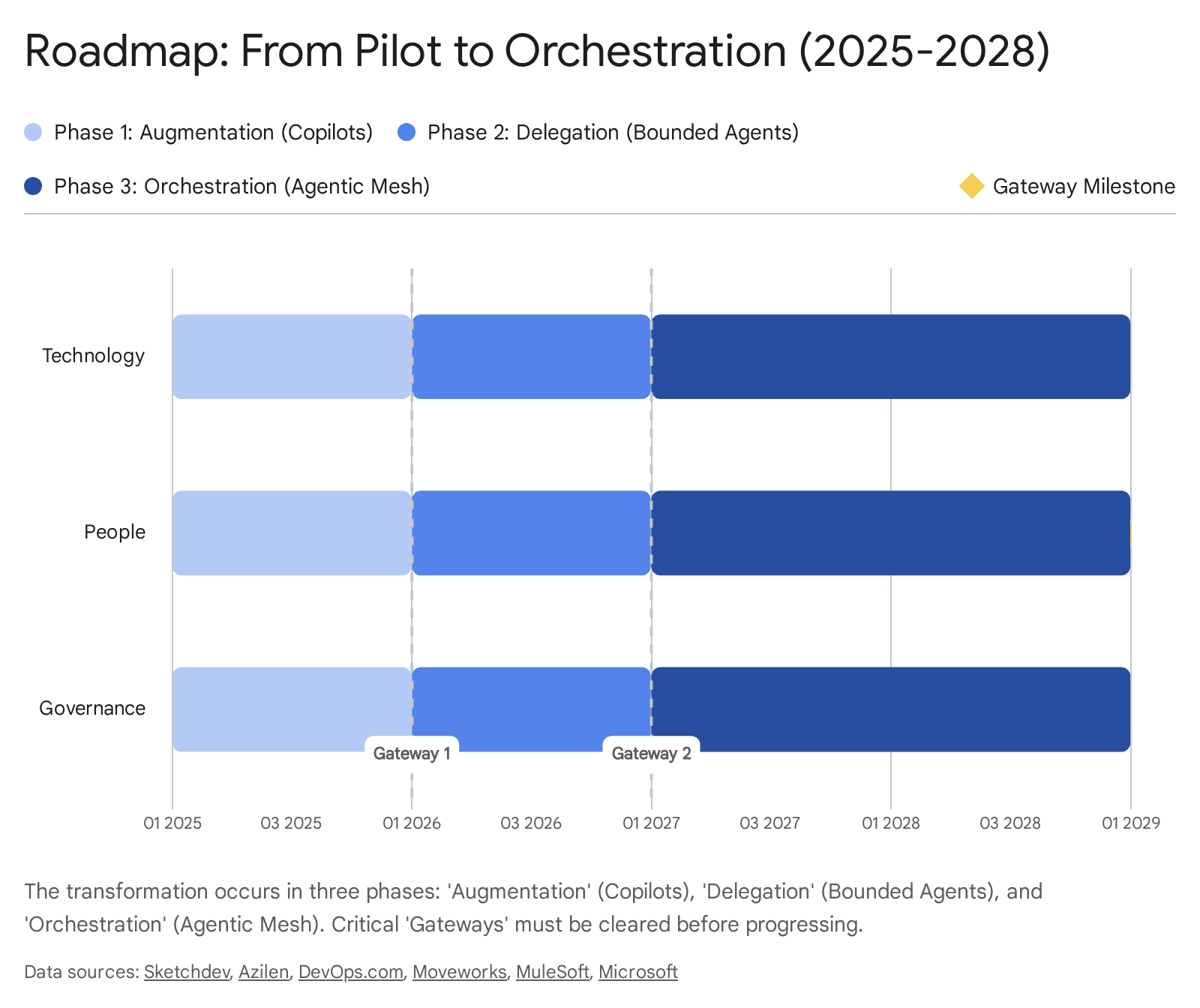

A phased path: do not “big bang” your way into an agentic enterprise

The memo’s roadmap is simple and practical:

Phase 1, Copilot era: human-in-the-loop assistance; key trigger is a unified data fabric; key risk is review fatigue.

Phase 2, Supervisor era: human-on-the-loop delegation; key trigger is an agent control plane with observability, audit logs, and OVIS; key risk is shadow AI and agent sprawl.

Phase 3, Mesh era: human-out-of-the-loop execution layers; key trigger is legal and regulatory clarity; key risk is institutional knowledge loss and systemic brittleness.

If you are in Phase 1 but talking like you are in Phase 3, you will ship governance theater and call it transformation.

Practical operating questions to run next week

If you want this to be more than thought leadership, run these as real diagnostics.

Intent quality: Can your leaders write a “definition of done” that a machine can execute without doing the wrong thing efficiently?

Context readiness: Do you have a context engine strategy, or are your norms trapped in tribal knowledge and private chats?

Decision rights: For every autonomous workflow, who is Owner and who is Vetoer? If you cannot answer, you have not built governance, you have built a demo.

Exception load: If project count increases, what is your plan for exception handling capacity so humans do not drown?

The paradox of efficiency (and the real competitive advantage)

The memo ends with a point I want to underline: friction often serves a function. It slows us down enough to think. If we remove coordination friction, we can build organizations that move extremely fast in the wrong direction.

The winners will not be the companies with the fastest agents. They will be the companies with the strongest human core: leaders, ethicists, and architects who can command the swarm without being consumed by it.

If you want a single takeaway: agentic transformation is not primarily a tooling decision. It is an organizational design decision.

And if you treat it like software adoption, the singularity will not make you efficient. It will make you fragile.